When Robots Colonize the Cosmos, Will They Be Conscious? (Op-Ed)

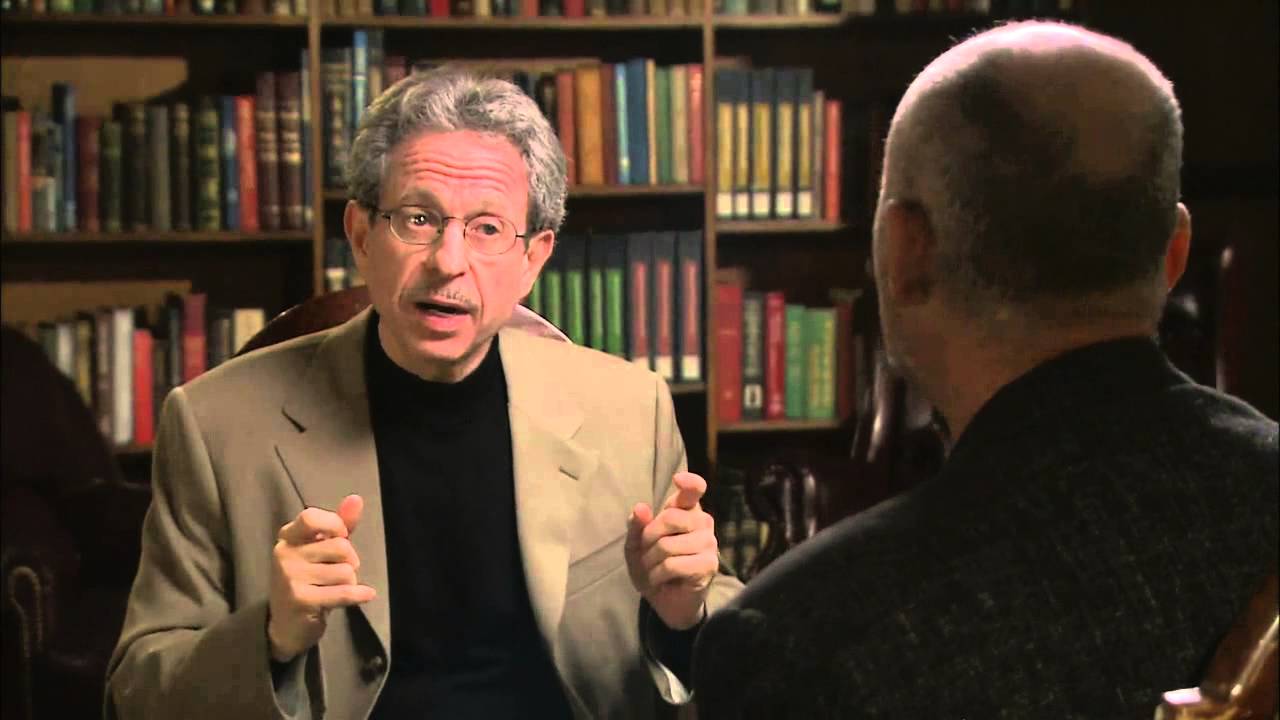

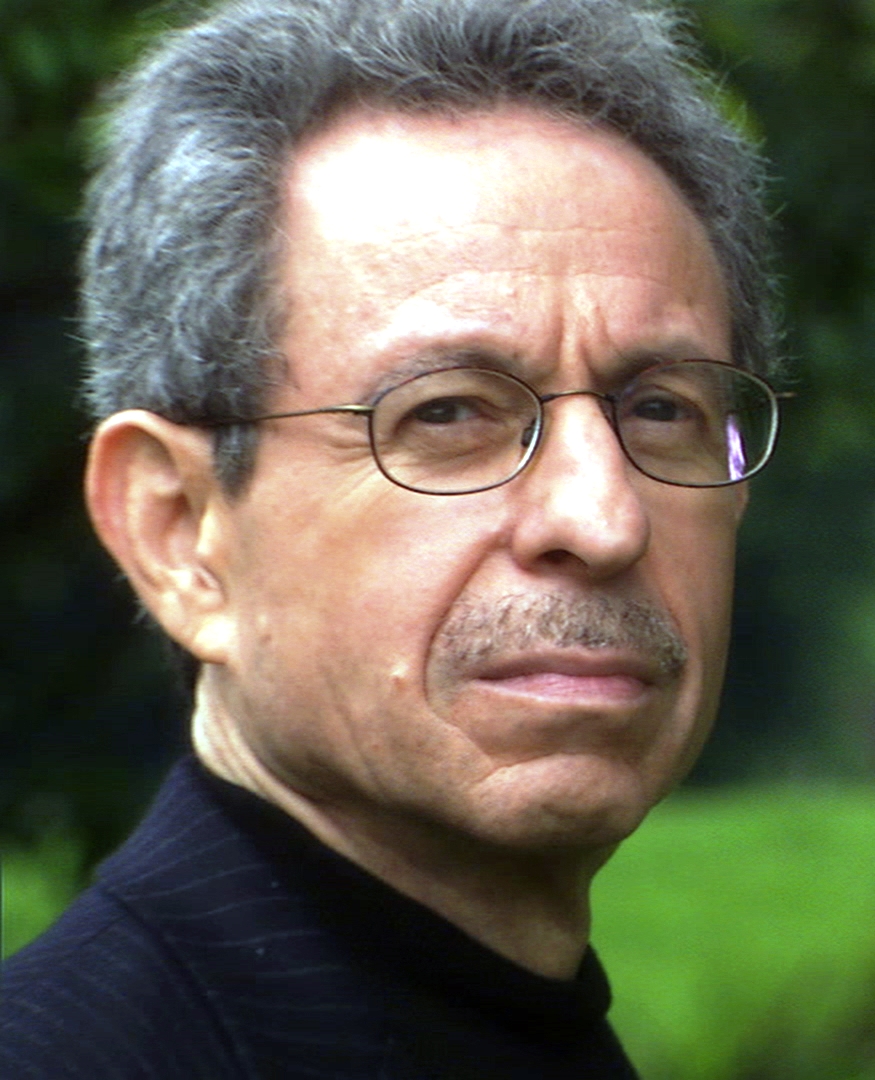

Robert Lawrence Kuhn is the creator, writer and host of "Closer to Truth," a public television series and online resource that features the world's leading thinkers exploring humanity's deepest questions. Kuhn is co-editor, with John Leslie, of "The Mystery of Existence: Why Is There Anything at All?" (Wiley-Blackwell, 2013). This article is based on "Closer to Truth" interviews produced and directed by Peter Getzels and streamed at www.closertotruth.com. Kuhn contributed this article to Space.com's Expert Voices: Op-Ed & Insights.

The first colonizers of the cosmos will be robots, not humans. At some point, they will become self-replicating robots that construct multiple versions of themselves from the raw materials of alien worlds. They will increase their numbers exponentially and inexorably inhabit the totality of our galaxy. It may take a few million years, a fleeting moment in cosmic time.

But will these cosmos-colonizing robots — self-replicating robots called "von Neumann machines" after the mathematician John von Neumann — ever be conscious? In other words, will they ever have inner awareness? Will they ever experience the exploration of worlds without end?

Does it matter? I say that it does. I see the question of consciousness as a foundation of the philosophy of space travel. Because if robots become conscious, then the deep reason for humans to go to the stars becomes diminished. Why be burdened with the heavy freight needed to sustain biological life?

On the other hand, if robots can never be conscious, then we humans might have some kind of moral imperative to venture forth. A galaxy colonized by only mentally blank zombies does not seem an ultimate good.

So can robots ever be conscious?

I start by assuming the “Singularity,” when artificial intelligence (“AI”) will redesign itself recursively and progressively, such that AI will become vastly more powerful than human intelligence ("superstrong AI"). Techno-futurists assume, almost as an article of faith, that superstrong AI (post-singularity) will inevitably be conscious.

I'm not so sure. Actually, I'm a skeptic — the deep cause of consciousness is the elephant in the room, and most techno-futurists do not see it.

What is consciousness?

Consciousness is a main theme of "Closer To Truth," and among the subtopics I discuss with scientists and philosophers on the program is the classic "mind-body problem" — what is the relationship between the mental thoughts in our minds and the physical brains in our heads? What is the deep cause of consciousness? (All quotes that follow are from "Closer To Truth.")

NYU philosopher David Chalmers famously described the "hard problem" of consciousness: "Why does it feel like something inside? Why is all our brain processing — vast neural circuits and computational mechanisms — accompanied by conscious experience? Why do we have this amazing inner movie going on in our minds? I don't think the hard problem of consciousness can be solved purely in terms of neuroscience."

"Qualia" are the core of the mind-body problem. "Qualia are the raw sensations of experience," Chalmers said. "I see colors — reds, greens, blues — and they feel a certain way to me. I see a red rose; I hear a clarinet; I smell mothballs. All of these feel a certain way to me. You must experience them to know what they're like. You could provide a perfect, complete map of my brain [down to elementary particles] — what's going on when I see, hear, smell — but if I haven't seen, heard, smelled for myself, that brain map is not going to tell me about the quality of seeing red, hearing a clarinet, smelling mothballs. You must experience it."

Can a computer be conscious?

To Berkeley philosopher John Searle, computer programs can never have a mind or be conscious in the human sense, even if they give rise to equivalent behaviors and interactions with the external world. (In Searle's "Chinese Room" argument, a person inside a closed space can use a rule book to match Chinese characters with English words and thus appear to understand Chinese, when, in fact, she does not.) But, I asked Searle, "Will it ever be possible, with hyperadvanced technology, for nonbiological intelligences to be conscious in the same sense that we are conscious? Can computers have 'inner experience'?"

"It's like the question, 'Can a machine artificially pump blood as the heart does?'" Searle responded. "Sure it can — we have artificial hearts. So if we can know exactly how the brain causes consciousness, down to its finest details, I don't see any obstacle, in principle, to building a conscious machine. That is, if you knew what was causally sufficient to produce consciousness in human beings and if you could have that [mechanism] in another system, then you would produce consciousness in that other system. Note that you don't need neurons to have consciousness. It's like saying you don't need feathers in order to fly. But to build a flying machine, you do need sufficient causal power to overcome the force of gravity."

"The one mistake we must avoid," Searle cautioned, "is supposing that if you simulate it, you duplicate it. A deep mistake embedded in our popular culture is that simulation is equivalent to duplication. But of course it isn't. A perfect simulation of the brain — say, on a computer — would be no more conscious than a perfect simulation of a rainstorm would make us all wet."

To robotics entrepreneur (and MIT professor emeritus) Rodney Brooks, "there's no reason we couldn't have a conscious machine made from silicon." Brooks' view is a natural consequence of his beliefs that the universe is mechanistic and that consciousness, which seems special, is an illusion. He claims that, because the external behaviors of a human, animal or even a robot can be similar, we "fool ourselves" into thinking "our internal feelings are so unique."

Can we ever really assess consciousness?

"I don't know if you're conscious. You don't know if I'm conscious," said Princeton University neuroscientist Michael Graziano. "But we have a kind of gut certainty about it. This is because an assumption of consciousness is an attribution, a social attribution. And when a robot acts like it's conscious and can talk about its own awareness, and when we interact with it, we will inevitably have that social perception, that gut feeling, that the robot is conscious.

"But can you really ever know if there's 'anybody home' internally, if there is any inner experience?" he continued. "All we do is compute a construct of awareness."

Warren Brown, a psychologist at Fuller Theological Seminary and a member of UCLA's Brain Research Institute, stressed "embodied cognition, embodied consciousness," in that "biology is the richest substrate for embodying consciousness." But he didn't rule out that consciousness "might be embodied in something nonbiological." On the other hand, Brown speculated that "consciousness may be a particular kind of organization of the world that just cannot be replicated in a nonbiological system."

Neuroscientist Christof Koch, president and chief scientific officer of the Allen Institute for Brain Science, disagrees. "I am a functionalist when it comes to consciousness," he said. "As long as we can reproduce the [same kind of] relevant relationships among all the relevant neurons in the brain, I think we will have recreated consciousness. The difficult part is, what do we mean by 'relevant relationships'? Does it mean we have to reproduce the individual motions of all the molecules? Unlikely. It's more likely that we have to recreate all the [relevant relationships of the relevant] synapses and the wiring ("connectome") of the brain in a different medium, like a computer. If we can do all of this at the right level, this software construct would be conscious."

I asked Koch if he'd be "comfortable" with nonbiological consciousness.

"Why should I not be?" he responded. "Consciousness doesn't require any magical ingredient."

Radical visions of consciousness

A new theory of consciousness — developed by Giulio Tononi, a neuroscientist and psychiatrist at the University of Wisconsin (and supported by Koch) — is based on "integrated information" such that distinct conscious experiences are represented by distinct structures in a heretofore unknown kind of space. "Integrated information theory means that you need a very special kind of mechanism organized in a special kind of way to experience consciousness," Tononi said. "A conscious experience is a maximally reduced conceptual structure in a space called 'qualia space.' Think of it as a shape. But not an ordinary shape — a shape seen from the inside."

Tononi stressed that simulation is "not the real thing." To be truly conscious, he said, an entity must be "of a certain kind that can constrain its past and future — and certainly a simulation is not of that kind."

Inventor and futurist extraordinaire Ray Kurzweil believes that "we will get to a point where computers will evidence the rich array of emotionally subtle types of behavior that we see in human beings; they will be very intelligent, and they will claim to be conscious. They will act in ways that are conscious; they will talk about their own consciousness and argue about it just the way you and I do. And so the philosophical debate will be whether or not they really are conscious — and they will be participating in the debate."

Kurzweil argues that assessing the consciousness of other [possible] minds is not a scientific question. "We can talk scientifically about the neurological correlates of consciousness, but fundamentally, consciousness is this subjective experience that only I can experience. I should only talk about it in first-person terms (although I've been sufficiently socialized to accept other people's consciousness). There's really no way to measure the conscious experiences of another entity."

"But I would accept that these nonbiological intelligences are conscious," Kurzweil concluded. "And that'll be convenient, because if I don't, they'll get mad at me."

Conscious robots

I claim that whether robotic probes colonizing the cosmos are conscious radically affects the nature of such colonization. The case where the robots are literally conscious, with humanlike inner awareness and experience of the cosmos, would differ profoundly, I submit, from the case where they are not literally conscious (with no inner awareness and no experience of anything), even though in both cases their superstrong AI would be vastly more powerful than human intelligence and by all accounts they would appear to be equally conscious.

I agree that after superstrong AI exceeds some threshold, science could never, even in principle, distinguish actual inner awareness from apparent inner awareness, say in our cosmos-colonizing robots. But I do not agree with what usually follows: that this everlasting uncertainty about inner awareness and conscious experience in other entities (nonbiological or biological) makes the question irrelevant. I think the question maximally relevant. Unless our robotic probes were literally conscious, even if they were to colonize every object in the galaxy, the absence of inner experience would mean a diminished intrinsic worth.

That's why the deep cause of consciousness is critical.

Alternative causes of consciousness

Through my conversations (and decades of night-musings), I have arrived at five alternative causes of consciousness (there may be others). Traditionally, the choice is between physicalism/materialism (No. 1 below) and dualism (No. 4), but the other three possibilities deserve consideration.

1. Consciousness is entirely physical, solely the product of physical brain, which, at its deepest levels, comprises the fields and particles of fundamental physics. This is "physicalism" or "materialism," and it is overwhelmingly the prevailing theory of scientists. To many materialists, the utter physicality of consciousness is more an assumed premise than a derived conclusion.

2. Consciousness is an independent, nonreducible feature of physical reality that exists in addition to the fields and particles of fundamental physics. This may take the form of a new, independent (fifth?) physical force or of a radically new organization of reality (e.g., 'qualia space' as postulated by integrated information theory).

3. Consciousness is a nonreducible feature of each and every physical field and particle of fundamental physics. Everything that exists has a kind of "proto-consciousness," which, in certain aggregates and under certain conditions, can generate human-level inner awareness. This is "panpsychism," one of the oldest theories in philosophy of mind (going back to pre-modern animistic religions and the ancient Greeks). Panpsychism, in various forms, is an idea being revived by some contemporary philosophers in response to the seemingly intractable "hard problem" of consciousness.

4. Consciousness requires a radically separate, nonphysical substance that is independent of a physical brain, such that reality consists of two radically disparate parts — physical and nonphysical substances, divisions, dimensions or planes of existence. This is "dualism." While human consciousness requires both a physical brain and this non-physical substance (somehow working together), following the death of the body and the dissolution of the brain, this nonphysical substance of or by itself could maintain some kind of conscious existence. (Though this nonphysical substance is traditionally called a “soul” — a term that carries heavy theological implications — a soul is not the only kind of thing that such a nonphysical substance could be.)

5. Consciousness is ultimate reality; the only thing that's really real is consciousness — everything, including the entire physical world, is derived from an all-encompassing "cosmic consciousness." Each individual instance of consciousness — human, animal, robotic or otherwise — is a part of this cosmic consciousness. Eastern religions, in general, espouse this kind of view. (See Deepak Chopra for contemporary arguments that ultimate reality is consciousness.)

Will superstrong AI be conscious?

I'm not going to evaluate each competing cause of consciousness. (That would require a course, not a column.) Rather, for each cause, I'll speculate whether nonbiological intelligences, such as cosmos-colonizing robots, with superstrong AI (post-singularity) could be conscious and possess inner awareness.

1. If consciousness is entirely physical, then it would be almost certainly true that nonbiological intelligences with superstrong AI would have the same kind of inner awareness that we do. Moreover, as AI would rush past the singularity and become ineffably more sophisticated than the human brain, it would likely express forms of consciousness higher than we today could even imagine.

2. If consciousness is an independent, nonreducible feature of physical reality, then it would remain an open question whether nonbiological intelligences could ever experience true inner awareness. (It would depend on the deep nature of the consciousness-causing feature and whether this feature could be manipulated by technology.)

3. If consciousness is a nonreducible property of each and every elementary physical field and particle (panpsychism), then it would seem likely that nonbiological intelligences with superstrong AI could experience true inner awareness (because consciousness would be an intrinsic part of the fabric of physical reality).

4. If consciousness is a radically separate, nonphysical substance not causally determined by the physical world (dualism), then it would seem impossible that superstrong AI (alone), no matter how advanced, could ever experience true inner awareness.

5. If consciousness is ultimate reality (cosmic consciousness), then anything could be (or is) conscious (whatever that may mean), including nonbiological intelligences.

Remember, in each of these cases, no one could detect, using any conceivable scientific test, whether the nonbiological intelligences with superstrong AI had the inner awareness of true consciousness.

In all aspects of behavior and communications, these nonbiological intelligences, such as our cosmos-colonizing robots, would seem to be equal to (or superior to) humans. But if these nonbiological intelligences did not, in fact, have the felt sense of inner experience, they would be "zombies" ("philosophical zombies" to be precise), externally identical to conscious beings, but nothing inside.

And this dichotomy elicits (a bit circularly) our probative questions about self-replicating robots that will inexorably colonize the cosmos. Post-singularity, will superstrong AI without inner awareness be in all respects just as powerful as superstrong AI with inner awareness, and in no respects deficient? In other words, are there kinds of cognition that, in principle or of necessity, require true consciousness? This could affect what it means fundamentally to colonize the cosmos. Moreover, would true conscious experience and inner awareness in these galaxy-traversing robots represent a higher form of “intrinsic worthiness,” some kind of absolute, universal value (however anthropomorphic this may seem)? For assessing the deep nature of robotic probes colonizing the cosmos, the question of consciousness is profound.

Is virtual immortality possible?

There's a further question, an odd one. Assuming that our cosmos-colonizing robots could become conscious, I can make the case that such galaxy-traveling consciousness could include you, yes you, your first-person inner awareness, exploring the cosmos virtually forever.

Here's the argument in brief. If robotic consciousness is possible, then consciousness must be entirely physical (No. 1 above), from which it follows that “virtual immortality” is possible (No. 1 below). This means that human personality can be uploaded (ultimately) into space probes and we ourselves can colonize the cosmos!

According to techno-futurists, the accelerating development of technology, including the complete digital replication of human brains, can enable virtual immortality, when the fullness of our first-person mental selves (our “I”) can be digitized and uploaded perfectly to nonbiological media (such as solid-state drives in space robots), and our mental selves can live on beyond the demise and decay of our fleshy, mushy, physical brains.

If so, I'd see no reason why we couldn't choose where we would like our virtual immortality to be housed, and if we choose a cosmos-colonizing robot, we could experience the galactic journeys through robotic senses (while at the same time 'enjoying' our virtual world, especially during those eons of dead time traveling between star systems).

But remember, I'm a skeptic, because whether virtual immortality is even possible is determined by the cause of consciousness. So again, we can assess the possibilities for virtual immortality by analyzing each of the five alternative causes of consciousness.

1. If consciousness is entirely physical, then our first-person mental self would be uploadable, and some kind of virtual immortality would be attainable. The technology might take hundreds or thousands of years — not decades, as techno-optimists believe — but barring human-wide catastrophe, it would happen.

2. If consciousness is an independent, nonreducible feature of physical reality, then it would be possible that our first-person mental self could be uploadable — though less clearly than in No. 1 above, because not knowing what this consciousness-causing feature would be, we could not know whether it could be manipulated by technology, no matter how advanced. But because consciousness would still be physical, efficacious manipulation and successful uploading would seem possible.

3. If consciousness is a nonreducible feature of each and every elementary physical field and particle (panpsychism), then it would seem probable that our first-person mental self would be uploadable, because there would probably be regularities in the way particles would need to be aggregated to produce consciousness, and if regularities, then advanced technologies could learn to control them.

4. If consciousness is a radically separate, nonphysical substance (dualism), then it would seem impossible to upload our first-person mental self by digitally replicating the brain, because a necessary cause of our consciousness, this nonphysical component, would be absent.

5. If consciousness is ultimate reality, then consciousness would exist of itself, without any physical prerequisites. But would the unique digital pattern of a complete physical brain (derived, in this case, from consciousness) favor a specific segment of the cosmic consciousness (i.e., our unique first-person mental self)? It's not clear, in this extreme case, that uploading would make much difference (or much sense).

Whereas most neuroscientists assume that whole brain replication can achieve virtual immortality, Giulio Tononi is not convinced. According to his theory of integrated information, "what would most likely happen is, you would create a perfect 'zombie' — somebody who acts exactly like you, somebody whom other people would mistake for you, but you wouldn't be there."

In trying to distinguish among these alternatives, I am troubled by a simple observation. Assume that a perfect digital replication of my brain does, in fact, generate human-level consciousness (surely alternative 1, possibly 2, probably 3, not 4, 5 doesn't matter). This would mean that my first-person self and personal awareness could be uploaded to a new medium (nonbiological or even, for that matter, a new biological body). But if "I" can be replicated once, then I can be replicated twice; and if twice, then an unlimited number of times.

So, what happens to my first-person inner awareness? What happens to my "I"?

Assume I do the digital replication procedure and it works perfectly — say, five times.

Where is my first-person inner awareness located? Where am I?

Each of the five replicas would state with unabashed certainty that he is "Robert Kuhn," and no one could dispute them. (For simplicity of the argument, physical appearances of the clones are neutralized.) Inhabiting my original body, I would also claim to be the real “me,” but I could not prove my priority. (See David Brin's novel "Kiln People" (Tor 2003), a thought experiment about "duplicates," and his comments on personal identity.)

I'll frame the question more precisely. Comparing my inner awareness from right before to right after the replications, will I feel or sense differently? Here are four obvious possibilities, with their implications:

1. I do not sense any difference in my first-person awareness. This would mean that the five replicates are like super-identical twins — they are independent conscious entities, such that each begins instantly to diverge from the others. This would imply that consciousness is the local expression or manifestation of a set of physical factors or patterns. (An alternative explanation would be that the replicates are zombies, with no inner awareness — a charge, of course, they will deny and denounce.)

2. My first-person awareness suddenly has six parts — my original and the five replicates in different locations — and they all somehow merge or blur together into a single conscious frame, the six conscious entities fusing into a single composite (if not coherent) "picture." In this way, the unified effect of my six conscious centers would be like the "binding problem" on steroids. (The binding problem in psychology asks how our separate sense modalities, like sight and sound, come together such that our normal conscious experience feels singular and smooth, not built up from discrete, disparate elements.) This would mean that consciousness has some kind of overarching presence or a kind of supra-physical structure.

3. My personal first-person awareness shifts from one conscious entity to another, or fragments, or fractionates. These states are logically (if remotely) possible, but only, I think, if consciousness would be an imperfect, incomplete emanation of evolution, devoid of fundamental grounding.

4. My personal first-person awareness disappears upon replication, although each of the six (original plus five) claims to be the original and really believes it. (This, too, would make consciousness even more mysterious.)

Suppose, after the replicates are made, the original (me) is destroyed. What then? Almost certainly my first-person awareness would vanish, although each of the five replicates would assert indignantly that he is the real "Robert Kuhn" and would advise, perhaps smugly, not to fret over the deceased and discarded original.

At some time in the future, assuming that the deep cause of consciousness permits this, the technology will work. If I were around, would I upload my consciousness to a cosmos-colonizing robot? I might, because I'd be confident that 1 (above) is true and 2, 3 and 4 are false, and that the replication procedure would not affect my first-person mental self one whit. So while it wouldn't feel like me (as I know me), I'd kind of enjoy sending 'Robert Kuhn' out there exploring star systems galore. (There's more. If my consciousness is entirely physical and can be uploaded, it can be uploaded to as many cosmos-colonizing robots as I'd like — or can afford. It gets crazy.)

Bottom line: whether nonbiological entities such as robots can be conscious, or not, presents us with two disjunctive possibilities, each with profound consequences. If robots can never be conscious, then there may be a greater moral imperative for human beings to colonize the cosmos. If robots can be conscious, then there may be less reason for humans to explore space in the body — but your personal consciousness could be uploaded into cosmos-colonizing robots, probably into innumerable such galactic probes, and you yourself (or your clones) could colonize the cosmos. (Or is something else going on?)

The views expressed are those of the author and do not necessarily reflect the views of the publisher. This version of the article was originally published on Space.com.